![SOLVED: Definition: The mutual information between two random variables X and Y, denoted as I(X; Y), is given by the equation: I(X; Y) = ∑∑ P(x, y) log [P(x, y) / (P(x)P(y))]](https://cdn.numerade.com/ask_images/ea727d3f82d94147bde6679a6365a38d.jpg)

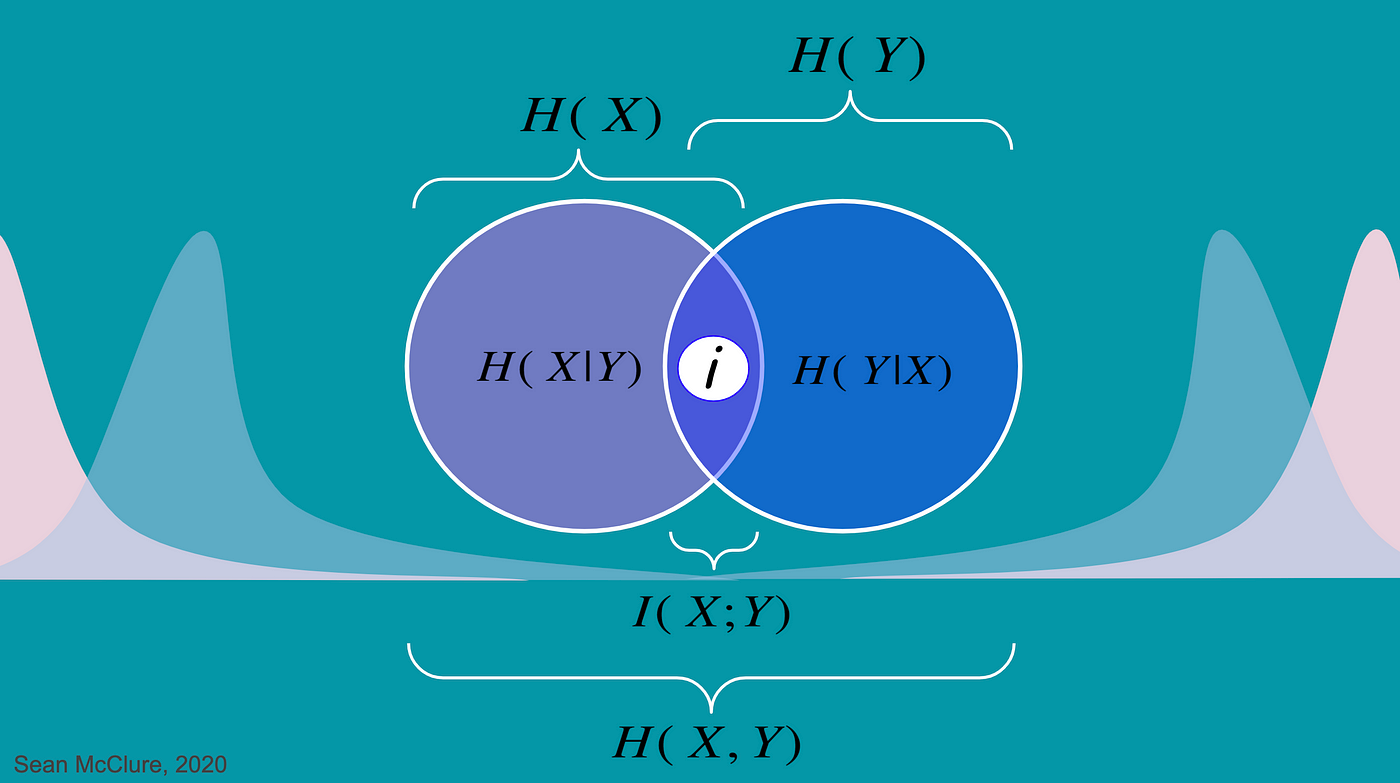

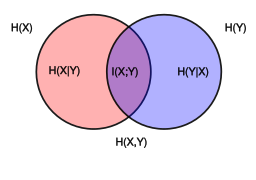

SOLVED: Definition: The mutual information between two random variables X and Y, denoted as I(X; Y), is given by the equation: I(X; Y) = ∑∑ P(x, y) log [P(x, y) / (P(x)P(y))], where the double sum runs over all values x of X and y of Y.
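As a rough illustration of the definition in the caption above, the following sketch evaluates the double sum for a discrete joint distribution given as a table. The function name `mutual_information`, the `base` parameter, and the choice of base-2 logarithms (bits) are assumptions made for this example; the caption does not fix a logarithm base. Cells with P(x, y) = 0 are skipped, following the usual convention that 0 log 0 = 0.

```python
import math


def mutual_information(joint, base=2.0):
    """Mutual information of a discrete joint distribution.

    `joint` is a list of rows; joint[i][j] is proportional to P(x_i, y_j).
    `base` is the logarithm base (an assumption here; 2 gives bits).
    """
    # Normalise so the table sums to 1 (allows raw counts as input).
    total = sum(sum(row) for row in joint)
    p = [[v / total for v in row] for row in joint]

    # Marginals: P(x) sums over columns, P(y) sums over rows.
    px = [sum(row) for row in p]
    py = [sum(col) for col in zip(*p)]

    mi = 0.0
    for i, row in enumerate(p):
        for j, pxy in enumerate(row):
            if pxy > 0:  # convention: 0 * log(0) contributes 0
                mi += pxy * math.log(pxy / (px[i] * py[j]), base)
    return mi


# Two hypothetical 2x2 tables: one independent, one perfectly dependent.
independent = [[0.25, 0.25], [0.25, 0.25]]
dependent = [[0.5, 0.0], [0.0, 0.5]]
print(mutual_information(independent))
print(mutual_information(dependent))
```

For the independent table every ratio P(x, y) / (P(x)P(y)) equals 1, so the sum vanishes; for the perfectly dependent table the result is one bit, the entropy of a fair binary variable.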

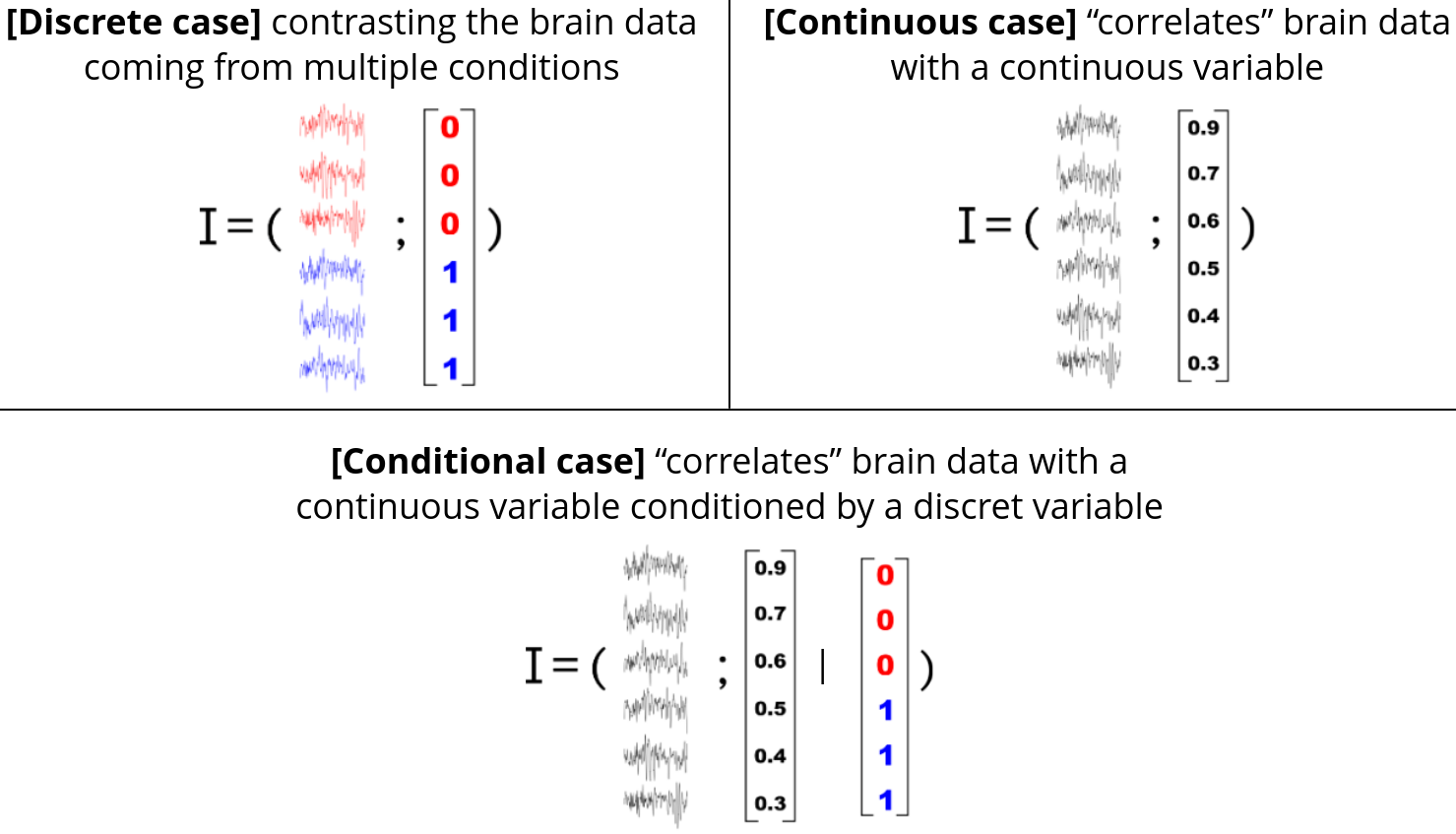

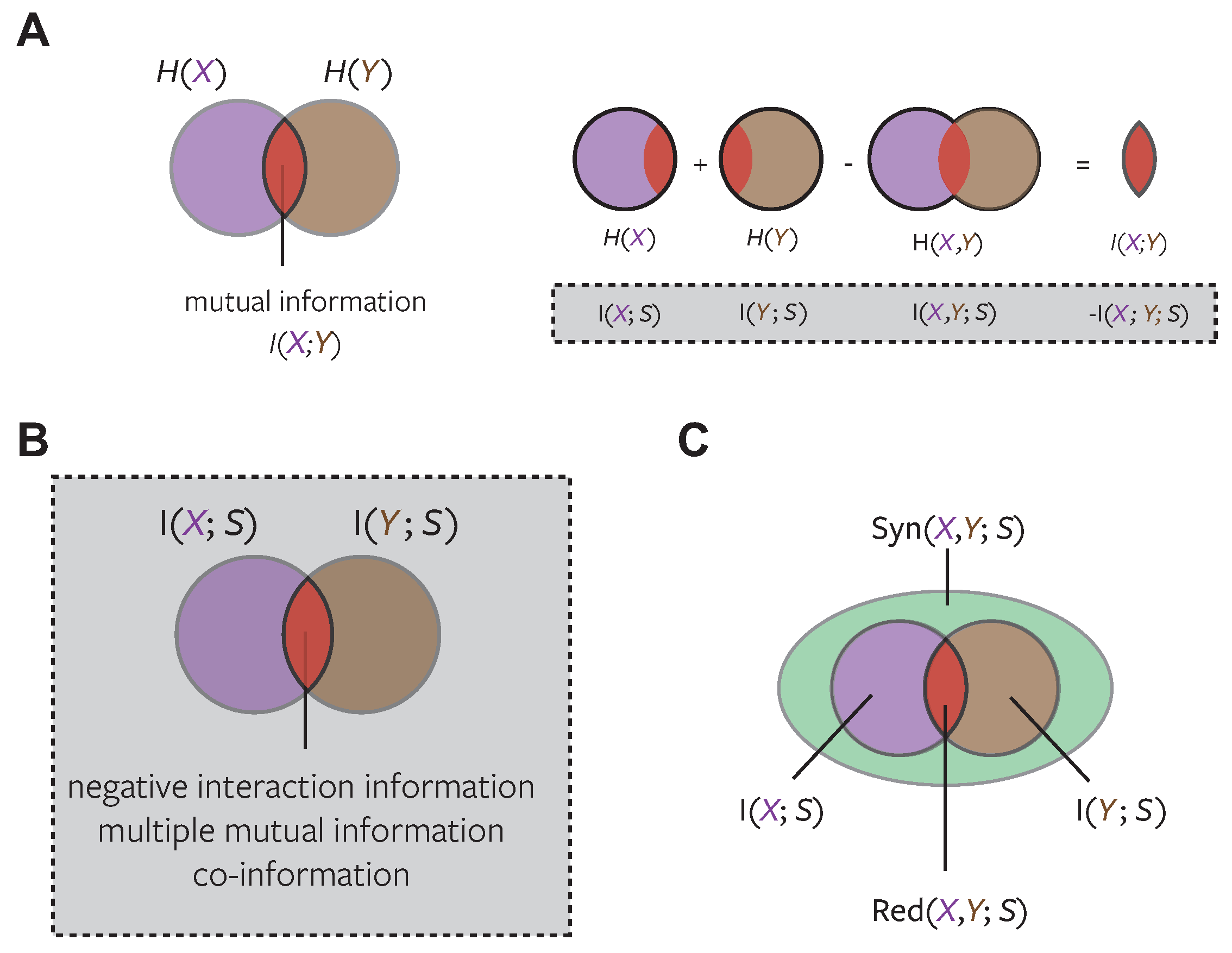

A scheme of our method to estimate the mutual information between two... | Download Scientific Diagram

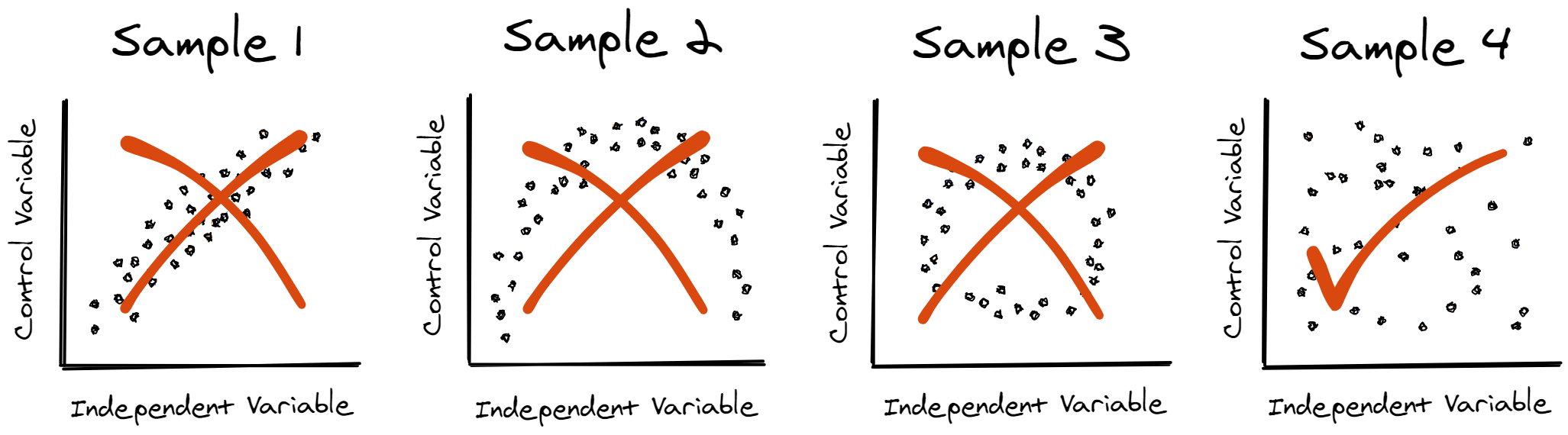

SciELO - Brasil - Rényi entropy and cauchy-schwartz mutual information applied to mifs-u variable selection algorithm: a comparative study

Conditional Mutual Information Estimation for Mixed Discrete and Continuous Variables with Nearest Neighbors | DeepAI

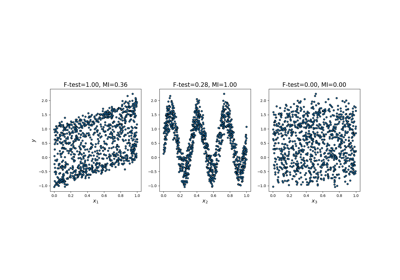

SciELO - Brasil - Rényi entropy and cauchy-schwartz mutual information applied to mifs-u variable selection algorithm: a comparative study

Mutual Information between Discrete Variables with Many Categories using Recursive Adaptive Partitioning | Scientific Reports

Using mutual information to estimate correlation between a continuous variable and a categorical variable - Cross Validated

![\[PDF\] Mutual Information between Discrete and Continuous Data Sets | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab99cb06acad1011ae0cc791cb7b35b74577cdfc/3-Figure2-1.png)