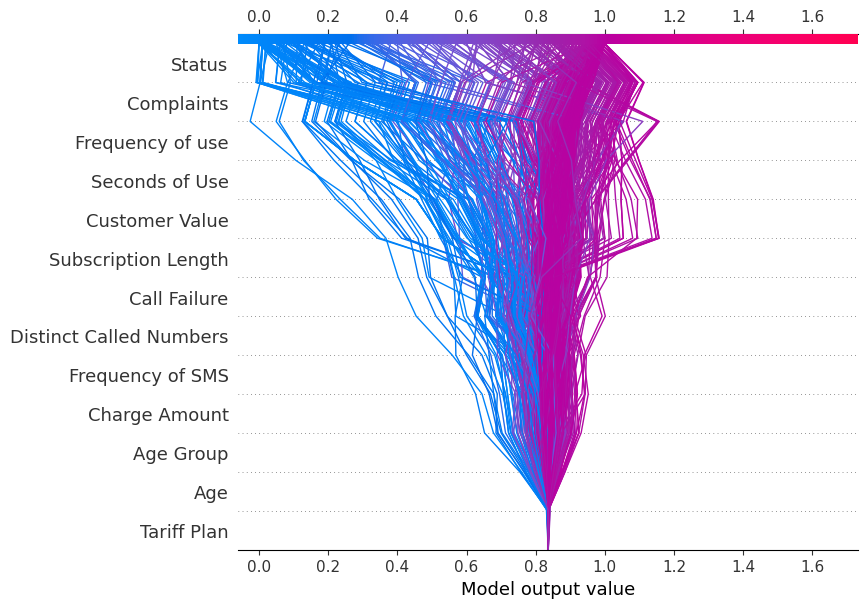

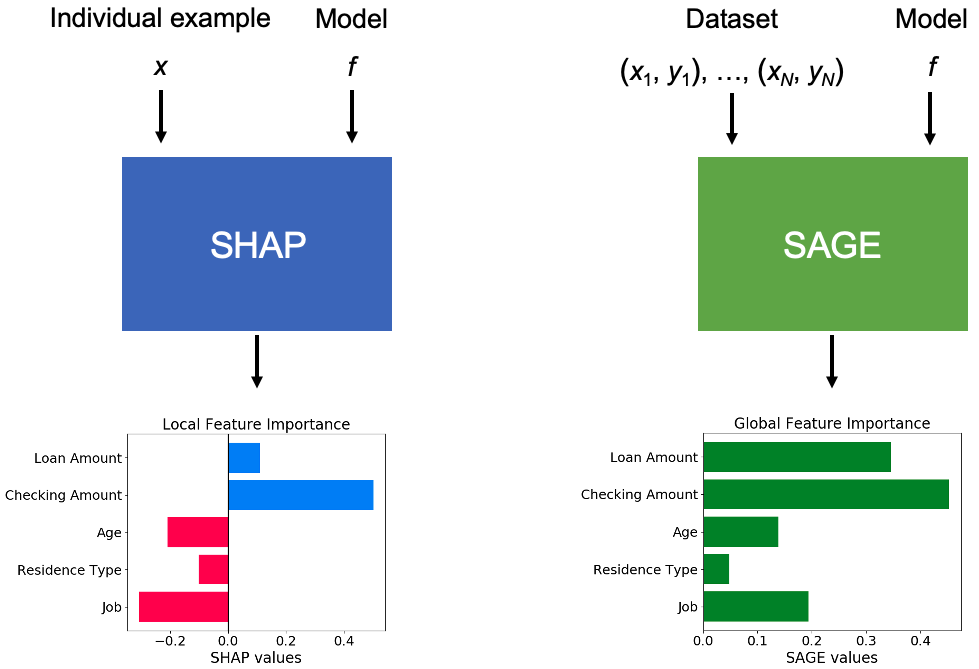

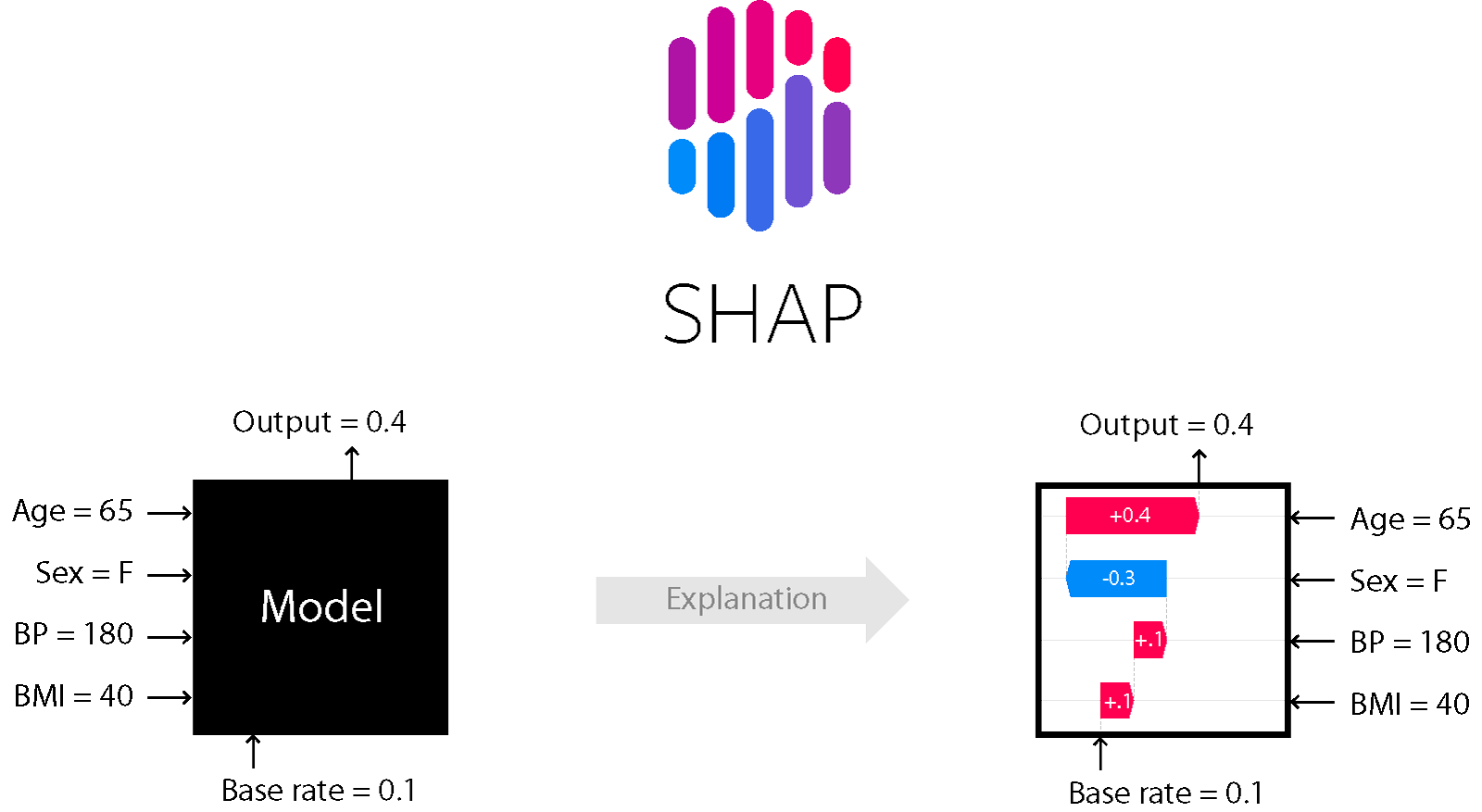

Explainable AI: SHAP Values. Introduction | by Alessandro Danesi | Data Reply IT | DataTech | Medium

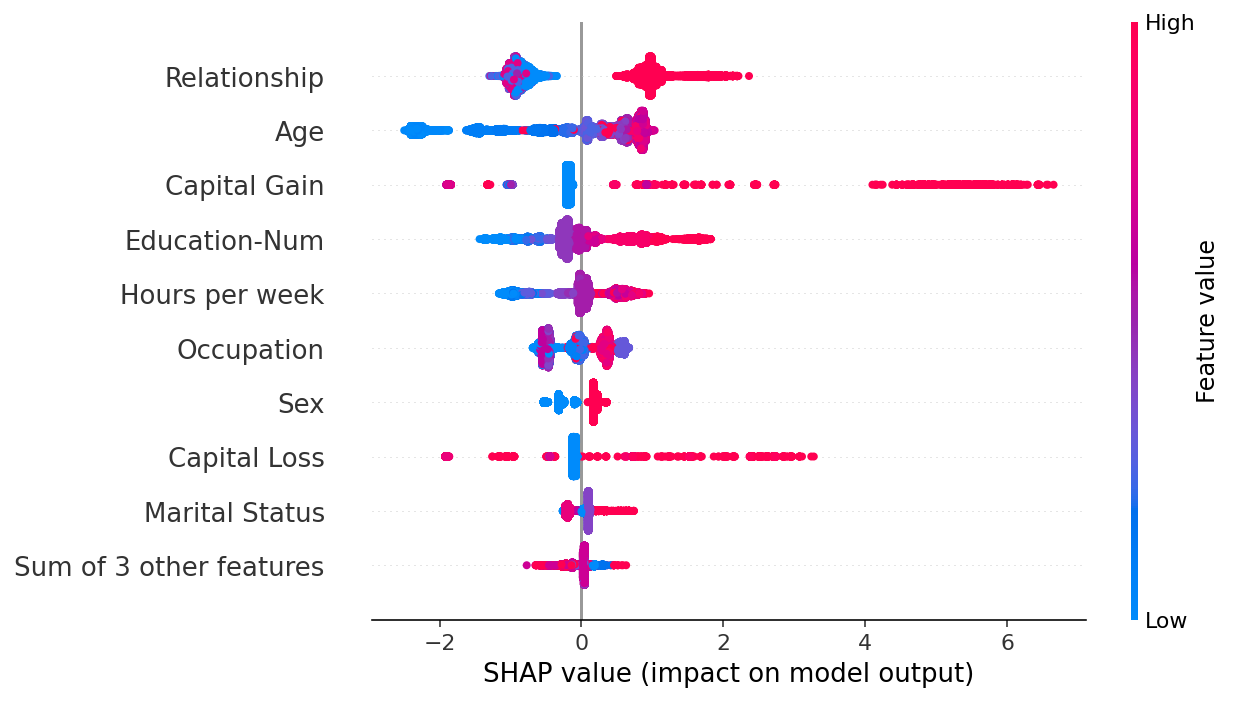

Feature importance based on SHAP values (The red and blue dots indicate... | Download Scientific Diagram

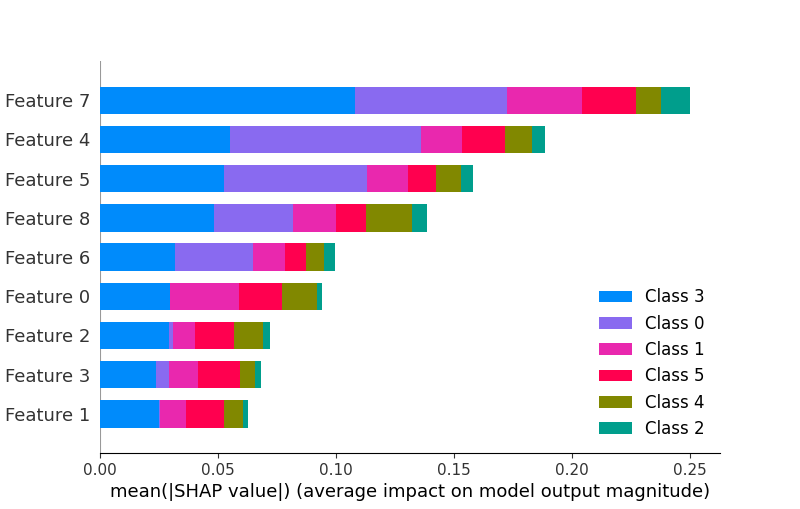

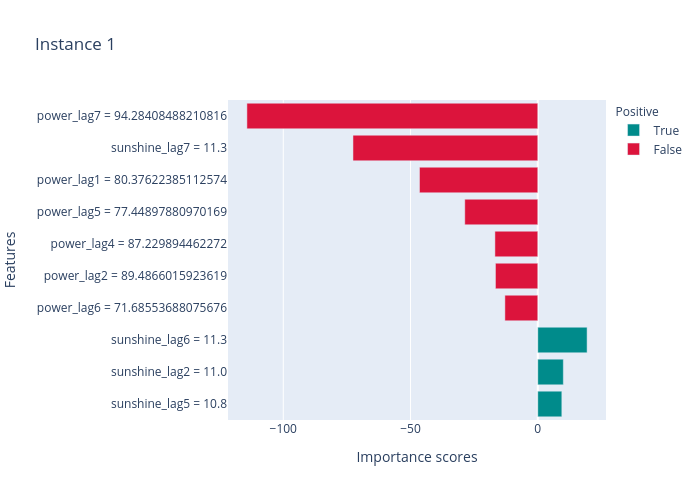

xgboost - Differences between Feature Importance and SHAP variable importance graph - Data Science Stack Exchange

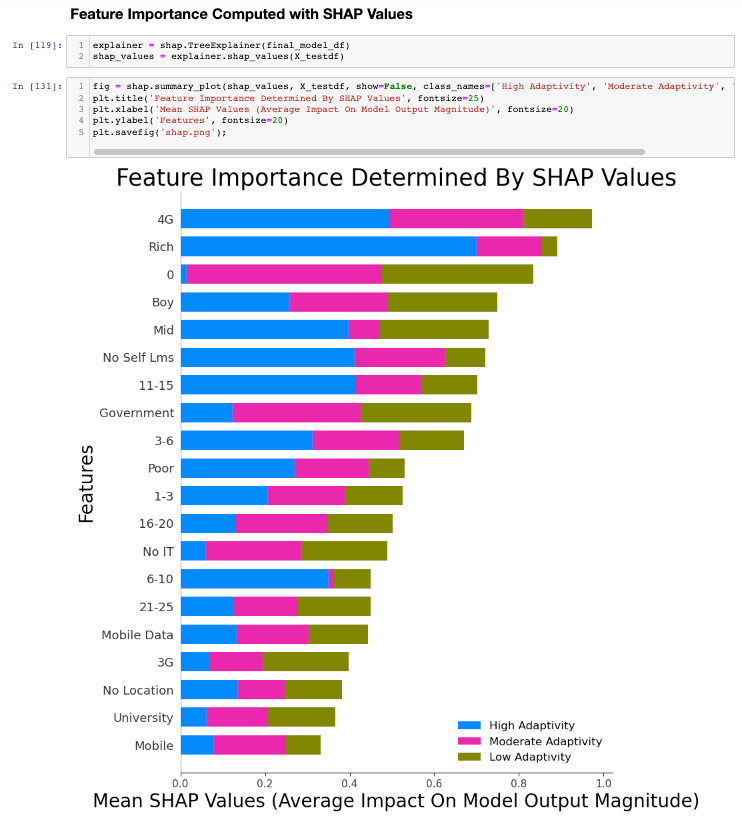

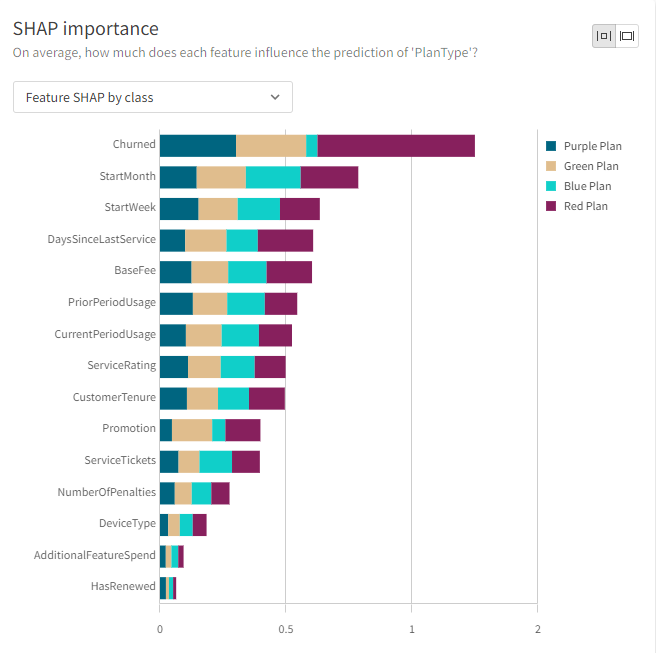

Analytics Snippet – Feature Importance and the SHAP approach to machine learning models | Actuaries Digital